One of the most critical files for SEO on a website is the robots.txt file. Therefore, every good SEO must know how to use and apply them in the best way. The most important thing is to know how to use the robot exclusion protocols, to block bots from some pages. In addition, you will be able to optimize the tracking budget and increase the tracking frequency. With this, you will be able to get a higher ranking of the correct page in the SERP competitors ranking.

In this article, we will show you how you can create robots.txt files. We will distribute the information from the simplest to the most complicated. Thus, you can understand everything about the robots txt file. The first thing we are going to teach you is the definition of these files and why they are so important. In addition, we will show you the detailed process of how to create them and some tips to succeed.

- Do you know what is robots txt file?

- Why is it important for SEOs to know about robots.txt?

- Do you know how robots.txt work?

- How can you create the robots.txt files?

- Where is the best place to put these files?

- Some recommendations to succeed in creating these files

- How can DigitizenGrow SEOs create robots.txt files for your page?

1. Do you know what is robots txt file?

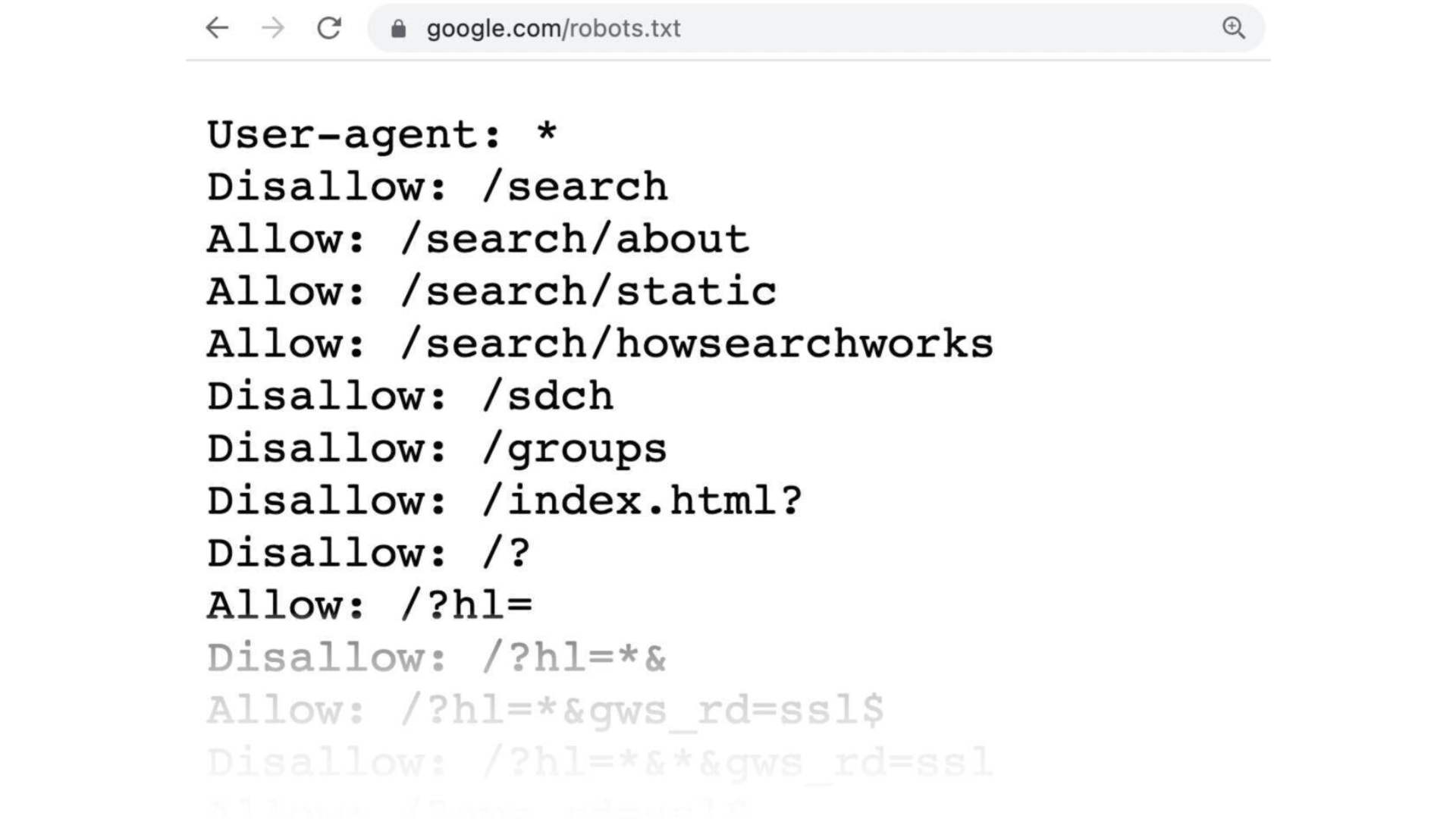

In very simple words, a robots.txt file is an instruction manual for web robots. In this way, it is in charge of informing bots of all kinds, which are the sections of a site that they should and should not track. The robots.txt is mainly used as a code of conduct to control all the activities of robots in search engines.

You can find that major search engines like Google, Bing, and Yahoo check robots.txt files regularly. This way, they can get instructions on how to crawl websites. The instructions are known as directives.

If there are no directives or any robots.txt file, search engines will crawl the entire website, private pages, and all. Despite, most of the search engines being compliant; it is very important to note that sticking with the directives in the robots.txt files is optional. This way, if they want, search engines can ignore this file.

Fortunately, you found that Google is not one of these search engines. Therefore, Google tends to obey all the instructions in a robots.txt file. Thanks to this, performing a search on Google, your search engine will correctly follow the instructions of the file.

2. Why is it important for SEOs to know about robots.txt?

Having a robots.txt file is not critical for many websites, especially those that are small. This happens because generally. Google can find and index all the essential pages on a single site. This way, they will not automatically index duplicate content or pages that do not matter to the search.

However, there is no good reason not to have a robots.txt file, so having one is recommended. Robots.txt files will give you much greater control over what your search engines can and cannot crawl on your website. Also, there are many reasons why it is very useful.

2.1 Allows non-public pages to be blocked from search engines

There are times when you have pages on your site that you want to be indexed. An example is that you are developing a new website in a test environment. Therefore, you want to make sure that this is hidden from users until it is released. However, you may also have login pages of your website that you want to appear in the SERPs.

If this is the case, you can use robots.txt to block these pages from search engine crawlers.

2.2 You will be able to control the search engine crawl budget

If you are experiencing difficulties to index all your pages in the different search engines; then you may have a problem with the crawl budget. In short, search engines are using the time allotted to crawl the content on dead-weight pages of your site.

By blocking URLs that are of tittle use with robots.txt files; the robots that search engines have can spend more than the crawl budget on the pages with higher relevance.

2.3 You can avoid resource indexing

It is known as good practice to use the no-index meta directive to prevent individual pages from being indexed. So the problem is that meta directives do not work well for media resources. Among them are PDF files and word documents.

This is where robots.txt comes in handy. This way, you can add a single line of text to your robots.txt file, preventing search engines from accessing your media files. Therefore, you see the usefulness of these files, but it is important to have an SEO strategist who can help us with everything related to search engines.

3. Do you know how robots.txt work?

Earlier, it was mentioned that robots.txt files function as an instruction manual for search engine robots. That is, it tells search bots where to crawl. Also, if the search crawler encounters a robots.txt file, the crawler will read it and stop crawling.

In case the web crawler does not find the file with this format; that is, if the file does not contain directives that hinder search bot activity, the crawler will continue to crawl the entire site as usual.

To succeed in this trace, the bot or user agent that is the one who applies the instructions must be defined. Subsequently, the rules or directives that the bot must follow must be established. Next, we will describe what these elements are and how they work.

3.1 User agent

The user agent is how specific web crawlers and other active programs are defined on the internet. There are many user agents on the web, including browser and device agents. In addition, it is important that you know that the user agent establishes what is the norm for any search engine.

3.2 Directives

Directives are the code of conduct that the organic SEO or anyone else wants the user agent to follow. That is, the directives are those that define how the search robot should crawl your web page. Directives can vary depending on the bot being used. GoogleBot currently supports the following directives when using robots.txt files.

3.2.1 Disallow

All SEO packages must know the different directives and the first one to mention will be “disallow”. This directive is used to prevent search engines from crawling some pages with a specific URL path or some files.

3.2.2 Allow

The allow directive allows search engines to crawl a specific page or subdirectory, belonging to a section of your website. Without this directive, that element that you want to appear, could not be tracked by the robots txt checker.

3.2.3 Robots txt sitemap

This directive is used by SEOs to specify the location of XML sitemaps in search engines.

3.2.4 Comments

The comment directive is used by humans, but not by search bots. “Comments” allows you to add comments that can be useful for on-page SEO. Also, it is very commonly used to prevent people with access to robots.txt files from removing important directives. In other words, the “comments” directive is used to add some important notes to the files.

3.2.5 Crawl-delay

Finally, the Crawl-delay directive function is used to limit how often a search engine performs the robots txt tester. That is the constancy with which search engines perform web page tracking.

4. How can you create the robots.txt files?

The process to create these types of files is quite simple, but it has many advantages for websites. The first thing you should do is open a text editor. It can be notepad or Microsoft Word. Then save the file with the name “robots”. It is important that you use lowercase and choose .txt as the file type extension.

The second and last step is to add any of the different directives mentioned above. You can use as many as you need. In case you need it, different robots txt generators can be useful to minimize human error.

5. Where is the best place to put these files?

In principle, the robots.txt files should be in the top-level directory of the subdomain being applied. If you are interested in performing subdomain searches, you can use Google search syntax tips. There are some rules that all SEOs should know to correctly locate their robots.txt files, which are:

- Name all files of this type in lowercase and using the name “robots.txt”.

- The file must be placed in the root directory of the subdomain you are referencing.

- You must assign each subdomain of the website its file with this format.

In case your file is not in the main directory; it means that the search engines cannot find the directives. Therefore, they will proceed to crawl the website in its entirety.

6. Some recommendations to succeed in creating these files

Many mistakes can be made in creating these files. Here are some key recommendations.

6.1 Put directives on different lines

To prevent search engines from getting confused when crawling and indexing the directives; you must place each directive in the robots.txt files on a different line, one below the other.

6.2 Specificity wins in most cases

Depending on the search engine the strategies may vary. For example, in the case of Bing and Google, the most granular directive is the one that will win. But, this is not so for all cases. In general, the first matching directive wins.

6.3 Use a group of directives per user agent

The robot files have an advantage in that a user agent can contain several groups of directives. However, to avoid human error, you must indicate the user agent at the beginning; you can then list all the directives that should be applied to that user agent.

6.4 Use wildcards (*) to simplify and specify instructions

When using directives, you are allowed to use wildcards (*). This is to apply different rules to all existing user agents. Thus, you can match URL patterns when declaring directives.

6.5 Use the “$” character to specify a URL

If you want to indicate the end of a URL, you must use the dollar sign ($) once you place the robots.txt path.

6.6 Place a robots.txt file for each subdomain

You should know that the directives for these files only apply to the subdomain, where the robots.txt file is hosted. Also, it is important that you know that all these files must be added to the main directory of each subdomain.

6.7 Do not use noindex in robots.txt files

Previously, Google allowed the “no-index” directive to be placed in robots.txt files. However, as of 2019, the possibility of using it was removed. Therefore, if you were thinking of placing the “no-index” directive, it is best to give up the idea. In case you want to place no-index content in search engines, the best method is to apply a no-index meta robot tag on the page you want to exclude.

6.8 Keep this type of files with a weight of less than 512kb

Google has implemented a size limit for this type of file and it is 512 kilobytes. That is, any content that exceeds this limit will be ignored.

7. How can DigitizenGrow SEOs create robots.txt files for your page?

Currently, many SEO strategies must be implemented for websites to be successful. If you want to have access to a group of SEOs that are professionals and support you in managing your website, the best possible option is DigitizenGrow. To be able to contact us you can call us at +971 43 316 688. We also have an email that you can write to and it is contact@digitizengrow.com.